Google’s SRE Model - Executive Lessons

Key term: Site Reliability Engineering (SRE) - an engineering discipline that applies software principles to operations so services stay reliable at scale.

Why this matters

Over the last twenty years Google’s computing power has grown more than 1,000x, yet the company now “spends far less effort per server” while enjoying higher reliability. That dramatic efficiency gain did not come from bigger budgets or heroic on-call rotations - it flowed from a management system that treats reliability as a product feature and governs it with measurable targets. The core ideas behind Google’s SRE practice are portable to any modern digital business.

Leadership patterns you can borrow today

- Canary every change - Roll new code to a tiny slice of traffic first, measure real user impact, then advance in controlled waves. Teams that skip this step bet the whole brand on a single release. When YouTube missed a canary gate, a harmless-looking config tweak crippled video playback in 13 minutes and forced an apology to advertisers.

- Have a “Big Red Button” - For every critical rollout, define the one-click (or one-command) rollback before you start. Google stores the script in source control so it travels with the change. One engineer once yanked their laptop network cable to halt a runaway job - messy, but faster than a polished portal.

- Test recovery, not just features - Fire-drill mitigation scripts the same way you run unit tests. Practicing a risky load-shedding procedure during a calm maintenance window revealed missing permissions and shaved minutes off mean time to recovery during the next real outage.

- Diversify hardware tiers - A single device model across a global backbone turns one silicon bug into a company-wide outage. Google now runs multiple backbone designs and firmware versions so latent faults stay contained. The same logic applies to cloud regions - diversity is cheap insurance.
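
The canary and Big Red Button patterns above can be sketched together as a small control loop. This is a minimal illustration, not Google's tooling: the function `progressive_rollout`, the `error_rate_at` measurement hook, and the `rollback` callback are all hypothetical names, and the health gate is assumed to be a simple error-rate ceiling.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class RolloutResult:
    completed: bool             # True if every wave passed its health gate
    halted_at: Optional[float]  # traffic fraction at which the rollout stopped


def progressive_rollout(
    waves: Sequence[float],
    error_rate_at: Callable[[float], float],
    max_error_rate: float,
    rollback: Callable[[], None],
) -> RolloutResult:
    """Advance wave by wave; trigger the rollback at the first unhealthy wave."""
    for fraction in waves:
        observed = error_rate_at(fraction)  # measure real user impact (assumed hook)
        if observed > max_error_rate:
            rollback()  # the pre-defined "Big Red Button"
            return RolloutResult(completed=False, halted_at=fraction)
    return RolloutResult(completed=True, halted_at=None)
```

A caller might pass waves such as `(0.01, 0.10, 0.50, 1.0)`, so that a bad change is stopped while it touches only one percent of traffic. The rollback is supplied before the rollout starts, which mirrors the advice to define it up front.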

Boardroom diagnostic questions

Use these in your next operations review to surface strategic gaps:

- What fraction of production changes ship behind a canary or progressive rollout, and how is that trend moving quarter over quarter?

- For our top-revenue service, where is the documented Big Red Button and when was it last exercised end-to-end?

- When did we last run a full recovery drill that required no emergency shell access, and what did we learn?

- How many distinct hardware or cloud-zone variants protect our critical data path, and do we model correlated failure in board-level scenarios?

A clear, data-backed answer to each shows whether reliability is governed or guessed.

Quick pulse check

Pick one high-stakes service and schedule a brief tabletop walk-through this week: trace a plausible failure, locate the Big Red Button, and verify that the rollout pipeline can deliver a one-percent canary. The exercise takes under ten minutes yet exposes whether basic safety nets are in place. Capture action items, but defer major fixes for now - you can schedule those later.

By translating Google’s hard-won reliability habits into focused executive actions, you plant the first seeds of an SRE culture that scales effort down as your platform scales up, turning reliability from a defensive cost center into a strategic advantage.

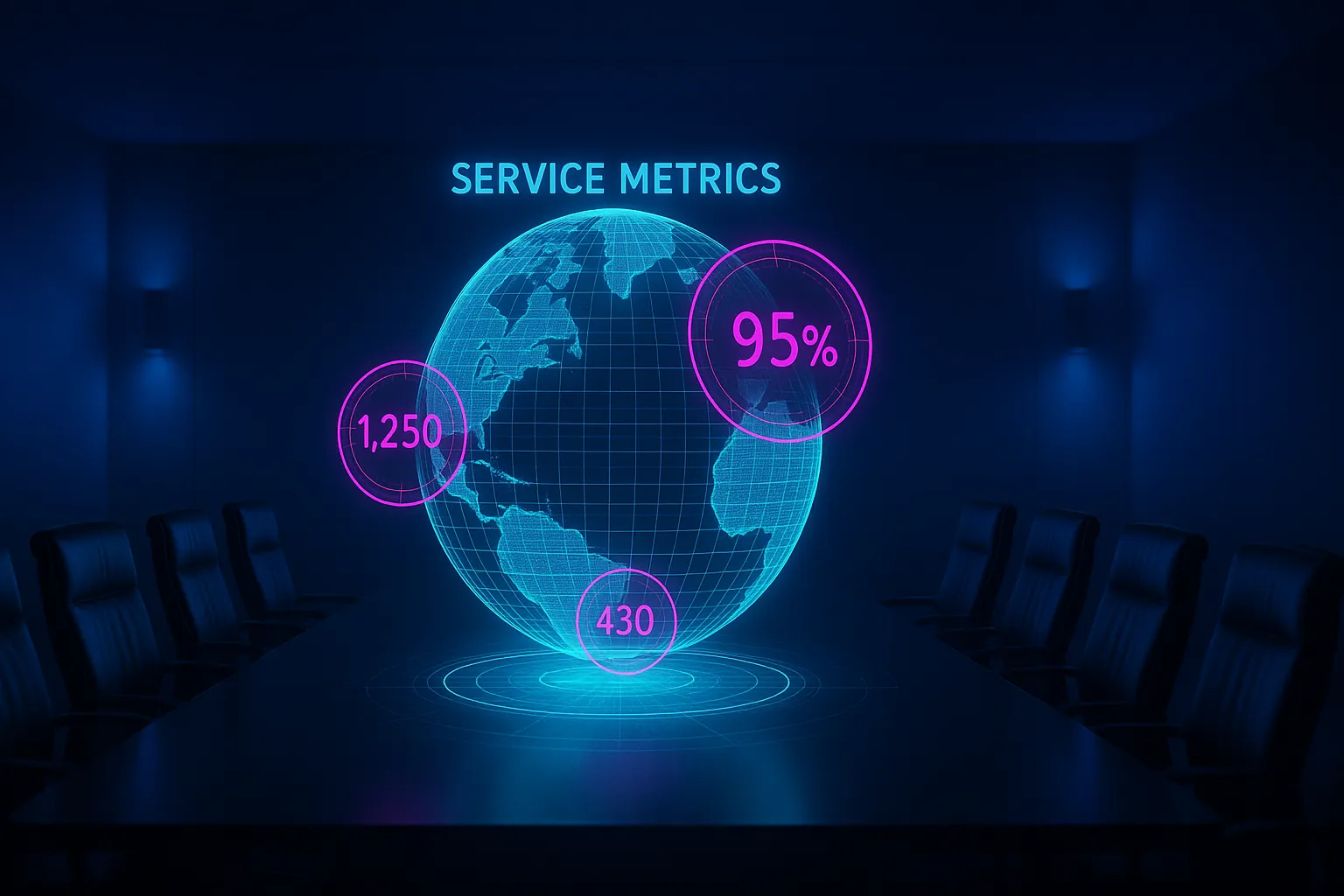

Service Level Objectives - Turning Reliability Into Revenue

Key term: Service Level Objective (SLO) - a target for how often a service meets a chosen reliability metric during a defined time window.

From downtime to lost deals

Picture a SaaS sales call during last quarter’s crunch. The prospect tries to sign up, but the login API stalls for eight seconds, then errors out. That single hiccup ends a $3 million annual contract because the executive buyer decides the platform “looked fragile.” The engineering team later discovers that login latency had been above its allowed limit for four of the previous six days - yet no alarm fired because the company had no SLO for that user journey. Moments like this are why a majority of organizations not yet using SLOs plan to adopt them within the next year and a half.

What makes an executive-grade SLO

The best objectives do three things at once:

- Expose true user experience - choose metrics that mirror customer perception, such as “95th-percentile checkout latency under 400 ms,” not CPU load.

- Map to dollars - link the metric to a real financial impact so leaders can weigh risk against revenue.

- Stay negotiable - bake an error budget (the tolerated slice of failure) into the target so product teams can trade reliability for speed when business value demands it.
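
To make the first criterion concrete, the sketch below checks a latency objective of the form “95th-percentile latency under 400 ms” against a batch of measurements. It is an illustration under stated assumptions: the function names are hypothetical, and the nearest-rank percentile method is one of several reasonable definitions, chosen here for simplicity.

```python
import math
from typing import Sequence


def percentile_nearest_rank(samples: Sequence[float], pct: float) -> float:
    """Return the pct-th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Nearest rank: the smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_latency_slo(
    latencies_ms: Sequence[float],
    threshold_ms: float = 400.0,  # from the example objective in the text
    pct: float = 95.0,
) -> bool:
    """True when the chosen percentile is under the threshold."""
    return percentile_nearest_rank(latencies_ms, pct) < threshold_ms
```

Measuring the percentile rather than the average matters because a handful of very slow requests can hide behind a healthy mean while still being what customers remember.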

A decade of research from DORA (DevOps Research and Assessment) reinforces this balance: the four classic delivery metrics measure velocity while SLOs watch stability - teams that optimize both outperform peers across growth and customer satisfaction.

The classic four metrics are Deployment Frequency, Lead Time for Changes, Change Failure Rate, and Mean Time to Restore (MTTR).

Four common pitfalls to avoid

- Copy-pasting cloud SLAs – Provider numbers are marketing constructs, not user-centric goals.

- Tracking everything – A dashboard of 30 indicators hides the three that matter; pick one or two premier signals per service.

- Setting perfection targets – “100% uptime” leaves no room for safe innovation and drives alert fatigue. A realistic 99.9% availability target, which allows 43.2 minutes of downtime in a 30-day month, forces healthy prioritization.

- Forgetting executive ownership – If SLO curves live only in engineering reports, funding decisions will ignore them. Present error-budget burn right next to revenue targets in Quarterly Business Review decks so stakes stay visible.
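
The 43.2-minute figure in the list above follows directly from the target and the length of the window. A minimal sketch of the arithmetic, assuming a 30-day month and a hypothetical helper name `error_budget_minutes`:

```python
def error_budget_minutes(slo_target: float, window_days: int = 30) -> float:
    """Minutes of allowed unreliability for an availability target over a window."""
    if not 0.0 <= slo_target <= 1.0:
        raise ValueError("slo_target must be a fraction between 0 and 1")
    total_minutes = window_days * 24 * 60  # 43,200 minutes in a 30-day window
    return total_minutes * (1.0 - slo_target)


monthly_budget = error_budget_minutes(0.999)    # about 43.2 minutes
perfect_budget = error_budget_minutes(1.0)      # 0.0: no room for any change to go wrong
four_week_budget = error_budget_minutes(0.999, window_days=28)  # about 40.3 minutes
```

The zero result for a 100% target is the arithmetic behind the warning against perfection targets: with no budget, every release is an overspend.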

Quick action - Draft a two-signal dashboard

Block fifteen minutes with your product and ops leads this week. On a whiteboard, sketch one availability SLO and one latency SLO for your flagship customer journey, each with its error budget plainly marked. Note who sees the dashboard and how over-budget alerts escalate. You will leave the room with a shared language for reliability trade-offs and a concrete next step for instrumentation.

By grounding reliability in user-visible objectives tied to money, you transform uptime from a fuzzy aspiration into a board-level lever - one that speeds features without gambling on customer trust.

Error Budgets - The CFO of Reliability

Key term: Error Budget - the allowable amount of unreliability a service can spend in a given period before work shifts from features to fixes.

Case in point

In early 2024 a consumer video platform rolled out a search-ranking tweak during peak traffic. Nine minutes later customer error rates had already burned through an entire month’s error budget, and the release pipeline locked automatically. Engineers spent the next hour rolling back and writing a post-mortem while marketing scrambled to calm advertisers. Nobody argued with the freeze - the budget had already told the story in dollars and trust. Google SREs have seen the same pattern: a single bad push can wipe out the roughly 43-minute monthly downtime allowance that comes with a 99.9% SLO.

Why executives should care

Error budgets are not an ops metric; they are an executive throttle on risk. Google’s data shows that roughly 70% of outages originate from changes initiated by people or pipelines. By forcing the business to “spend” reliability whenever it ships, the budget turns a fuzzy engineering trade-off into an accounting problem the whole C-suite understands: when the balance hits zero, velocity pauses automatically.

Three governance triggers that turn budgets into decisions

- Freeze on overspend - If the rolling four-week window is out of budget, halt all changes except security fixes until the service is back within its SLO.

- Mandatory incident review at 20% burn - A single incident that consumes more than 20% of the budget requires an incident review attended by an executive, with at least one top-priority corrective action.

- CTO tie-breaker - Disputes over budget calculation or exemptions escalate directly to the CTO, keeping accountability clear and fast.
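
The first two triggers are mechanical enough to express as a small policy check. The sketch below is illustrative only: the names `BudgetStatus`, `PolicyDecision`, and `evaluate_policy` are hypothetical, “out of budget” is assumed to mean that spend has reached or exceeded the budget, and the CTO tie-breaker is left out because it is a human escalation rather than a calculation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BudgetStatus:
    budget_minutes: float          # error budget for the rolling four-week window
    incident_minutes: List[float]  # budget consumed by each incident in the window


@dataclass
class PolicyDecision:
    freeze_changes: bool                 # halt all changes except security fixes
    incidents_needing_review: List[int]  # indexes into incident_minutes
    remaining_minutes: float


def evaluate_policy(status: BudgetStatus, review_threshold: float = 0.20) -> PolicyDecision:
    """Apply the freeze-on-overspend and 20% incident-review triggers."""
    spent = sum(status.incident_minutes)
    # Assumption: the freeze starts once the whole budget is used up.
    freeze = spent >= status.budget_minutes
    # Any single incident above the threshold share of the budget needs a review.
    limit = review_threshold * status.budget_minutes
    needs_review = [i for i, m in enumerate(status.incident_minutes) if m > limit]
    return PolicyDecision(freeze, needs_review, status.budget_minutes - spent)
```

Because the inputs come from existing SLO data, a check like this could run on a schedule and feed a release pipeline, which is what makes the freeze automatic rather than a matter of debate.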

Making the budget visible

A number sitting in Grafana will not change behavior. Leading teams surface budget burn right next to revenue and feature metrics in quarterly business reviews. One media company colors its roadmap slides green, yellow, or red depending on remaining budget, so product managers arrive already knowing whether the next campaign can launch or must wait for hardening.

Culture shift without new head count

Error budgets require no extra hiring or bespoke tooling. The numbers come straight from existing SLO dashboards; the policy fits on one page. What changes is the conversation: instead of ops pleading for caution, decision makers see a balance sheet everyone agreed to in advance. When the budget is healthy, product can move fast, confident that users have headroom for minor pain; when it shrinks, the organization slows down together - engineers, product, marketing, finance - until trust is restored.

By treating reliability like cash in the bank, error budgets give executives a simple, data-backed mechanism to balance innovation with stability. The result is faster feature delivery when customers can afford it and a disciplined pause when they cannot - a governance model that pays for itself every time a risky release is caught before it reaches every user.

Building an SRE Culture in Traditional Organizations

Key term: Blameless Post-Mortem - a post-incident review that focuses on learning and system fixes rather than assigning individual blame.

A story from the trenches

Standard Chartered Bank began its SRE journey with a five-person pilot team assigned to just five critical applications. Twelve months later that single experiment had expanded across 200 systems and become the bank’s default support model, thanks to executive sponsorship from the Group CIO and a relentless focus on learning from incidents over finger-pointing. Their experience mirrors the broader industry signal: 47% of SRE leaders say “learning from incidents” is the weakest link in their reliability practice. If nearly half the field still struggles to convert outages into usable knowledge, legacy culture - not tooling - is the primary obstacle.

The hidden blockers you must surface

- Hierarchy inertia stalls truth-telling in war rooms; junior engineers hesitate to challenge senior voices even when dashboards prove a different root cause.

- Functional silos keep development, operations, and customer success from sharing the same timeline of an incident, so lessons fragment and repetition creeps in.

- Punitive metrics such as “number of tickets closed” reward speed over insight, discouraging the deep analysis that prevents repeat failures.

Three culture enablers with outsized ROI

- Executive-visible incident reviews – Lowe’s retail platform reduced mean time to recovery by more than 80% only after weekly post-mortem meetings became mandatory for directors and VPs, turning learning into a leadership ritual.

- Psychological safety as a stated policy – Publish a brief charter that forbids blame, emphasizes system thinking, and guarantees career protection for anyone who surfaces risk.

- Progressive rollout guardrails – When teams know a 1% canary and automated rollback stand between them and customers, they are more willing to experiment - and to admit near misses before they become headlines.

Five-play executive game plan

Key term: Failure injection - a testing technique that deliberately introduces errors or failures into a system to assess its resilience, error handling, and overall robustness.
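
For readers who want to see what failure injection looks like in practice, here is a minimal sketch at the level of a single function call. It is an assumption-laden illustration, not a production chaos tool: `with_failure_injection` and `InjectedFailure` are hypothetical names, and real drills usually inject faults at the network or infrastructure layer.

```python
import random
from typing import Any, Callable, Optional


class InjectedFailure(RuntimeError):
    """Raised on purpose so teams can rehearse their response."""


def with_failure_injection(
    func: Callable[..., Any],
    failure_rate: float,
    rng: Optional[random.Random] = None,
) -> Callable[..., Any]:
    """Wrap func so that a fraction of calls fail deliberately."""
    generator = rng if rng is not None else random.Random()

    def wrapper(*args: Any, **kwargs: Any) -> Any:
        # random() returns a value in [0.0, 1.0), so a rate of 1.0 always fails
        # and a rate of 0.0 never does.
        if generator.random() < failure_rate:
            raise InjectedFailure("deliberate failure for a resilience drill")
        return func(*args, **kwargs)

    return wrapper
```

Wrapping a dependency on a non-customer-facing service with a small failure rate lets a team observe whether alerts fire and retries behave before a real outage forces the question.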

Rather than another bullet list, envision the following plays as a sequential offensive drive. Each builds momentum for the one that follows.

Play 1 – Broadcast the “why.” Kick off with a 15-minute town-hall segment where the COO retells a recent near-miss, quantifying the revenue that almost evaporated. This anchors reliability in business reality.

Play 2 – Flip the metrics. Replace ticket-closure quotas with a single KPI: “percentage of incidents that generate a completed blameless post-mortem within five working days.” Ownership shifts from fast fixes to lasting fixes.

Play 3 – Stage a live rehearsal. Run a no-notice failure injection on a non-customer-facing service. Let teams practice the new review flow in a low-stakes arena, then iterate the checklist.

Play 4 – Celebrate the first hard lesson. When the next real incident surfaces a design flaw, publicly credit the engineer who wrote the candid analysis on the company Slack. Culture changes when careers advance because of transparency, not heroics.

Play 5 – Institutionalize learning. After three full post-mortems, compile recurring themes into an “incident patterns” page on the internal wiki. Require product and finance leaders to skim it before approving roadmap bets that add risk.
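
The KPI proposed in Play 2 can be computed from a simple incident log. The sketch below assumes each incident is recorded as a pair of dates (when it occurred and when its post-mortem was completed, or `None` if it never was), counts Monday through Friday as working days, and ignores public holidays. The function names are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional, Sequence, Tuple

Incident = Tuple[date, Optional[date]]  # (incident date, post-mortem completion date)


def working_days_between(start: date, end: date) -> int:
    """Count Monday-Friday days after start, up to and including end."""
    days = 0
    current = start
    while current < end:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0-4 are Monday through Friday
            days += 1
    return days


def postmortem_kpi(incidents: Sequence[Incident], limit_days: int = 5) -> float:
    """Percentage of incidents with a post-mortem completed within the limit."""
    if not incidents:
        return 0.0
    on_time = sum(
        1
        for occurred, completed in incidents
        if completed is not None
        and working_days_between(occurred, completed) <= limit_days
    )
    return 100.0 * on_time / len(incidents)
```

Incidents with no completed post-mortem count against the percentage, so the number cannot be improved by quietly skipping reviews.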

Closing thoughts

Culture work rarely shows up on a Gantt chart, yet it is the multiplier on every reliability dollar you spend. One financial CIO put it bluntly after adopting these plays: “We didn’t buy a monitoring suite; we bought ourselves honesty.” Start with Play 1 this week and you set the tone that reliability is everyone’s job - and that learning, not blame, is the path to competitive advantage.